There were a few typos and other problems in the paper that was posted on Tuesday. The ones that I noticed have been corrected, resulting in a new version of the paper. The original version is preserved at:

http://dl.dropbox.com/u/24876056/CIS565/Old/Paper.pdf

CIS 565 - Final Project

This blog covers the implementation of my final project for the GPU programming and architecture class at the University of Pennsylvania. The aim of the project is to create a deferred renderer that uses screen space classification to determine whether lighting or a post-processing effect need be applied to a certain tile in screen space.

Thursday, April 28, 2011

Tuesday, April 26, 2011

Final Project Deliverables

The project is complete. Links to the deliverables follow:

Tuesday, April 19, 2011

Per-object Motion Blur

Today, I was able to add per-object screen-space motion blur. Basically, an object is flagged if it is "animated" (I use the term loosely here because animation in my project just covers a static object moving in a circular path). A screen-space velocity is calculated by comparing the position computed with the model matrix for the current frame against the position computed with the model matrix from the previous frame. Because only the model matrix differs between the two, changing the view does not add any motion blur. I then used the technique from Section 27.3 of GPU Gems 3 to perform the actual blur. As an additional step, the shader also checks the object ID of each sampled pixel to determine whether it belongs to the same object. This way, the blurring effect avoids the weird discontinuities that would arise from sampling the non-moving background objects.
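
The two steps above can be sketched on the CPU. This is a minimal illustration rather than the project's shader: the names `screenVelocity` and `blurPixel`, the column-major `Mat4` layout, the single-channel image, and the one-full-velocity-step-per-tap spacing are all assumptions made for the example.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat4 = std::array<float, 16>;   // column-major, as in OpenGL (assumed layout)
struct Vec2 { float x, y; };
struct Vec4 { float x, y, z, w; };

// Column-major 4x4 matrix times a 4-component vector.
Vec4 mul(const Mat4& m, const Vec4& v) {
    return { m[0]*v.x + m[4]*v.y + m[8]*v.z  + m[12]*v.w,
             m[1]*v.x + m[5]*v.y + m[9]*v.z  + m[13]*v.w,
             m[2]*v.x + m[6]*v.y + m[10]*v.z + m[14]*v.w,
             m[3]*v.x + m[7]*v.y + m[11]*v.z + m[15]*v.w };
}

// Velocity of an object-space point in texture coordinates ([0,1] per axis).
// The same viewProj is used for both frames, so camera motion adds no blur.
Vec2 screenVelocity(const Mat4& viewProj, const Mat4& modelCurr,
                    const Mat4& modelPrev, const Vec4& objPos) {
    Vec4 c = mul(viewProj, mul(modelCurr, objPos));
    Vec4 p = mul(viewProj, mul(modelPrev, objPos));
    assert(c.w != 0.0f && p.w != 0.0f);
    // NDC spans [-1,1] while texture space spans [0,1], hence the factor 0.5.
    return { 0.5f * (c.x / c.w - p.x / p.w), 0.5f * (c.y / c.w - p.y / p.w) };
}

// Average numSamples taps along the velocity, rejecting taps that fall off
// the image or whose object ID differs from the centre pixel's ID.
float blurPixel(const std::vector<float>& color, const std::vector<int>& id,
                int width, int height, int px, int py,
                Vec2 velocity, int numSamples) {
    const int centreId = id[py * width + px];
    float sum = 0.0f;
    int used = 0;
    for (int s = 0; s < numSamples; ++s) {
        int sx = px + (int)std::lround(velocity.x * width  * s);
        int sy = py + (int)std::lround(velocity.y * height * s);
        if (sx < 0 || sx >= width || sy < 0 || sy >= height) continue;
        if (id[sy * width + sx] != centreId) continue;   // different object
        sum += color[sy * width + sx];
        ++used;
    }
    return sum / used;   // used >= 1 because tap s == 0 is the pixel itself
}
```

Rejecting taps by object ID is what keeps background colors out of the average; it is also why the silhouette stays sharp, since pixels just outside the object never receive any of the object's color.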

One limitation of this method is that the outlines of the objects aren't blurred. Thus, the effect doesn't look exactly right because the outline of the object is still sharply defined. Posted below are two sample images. The top has motion blur enabled, while the bottom picture doesn't. Only one of the spiders and the monkey head are "animated".

Need glasses?

I was able to implement a first-pass attempt at edge blurring today. The idea is that this can approximate some sort of real anti-aliasing. While I am aware that there are much better methods for anti-aliasing in a deferred shader, I am just looking to do something rather simple. Anyway, I used the following link as an inspiration for my edge detection shader: forum post. However, my actual shader for edge detection looks very different; I only looked at the post briefly.

The previous picture shows my edge detector in action. One fairly obvious problem is that some of the lines appear thicker than they should be, which results in more pixels getting blurred than necessary. When I generated the edges, I also generated a horizontal and a vertical blur texture. My hope was that I could combine a weighting of these two blurs to achieve both a general blur and motion-directed blurs. I'm now convinced that such a method won't work, and I think I will just have to use weighted box blurs for both. A side-by-side comparison of the edge-blur effect with an unblurred image follows:

If you can't tell, the unblurred image is on the right, and the blurred image is on the left. To me, instead of making the edges appear less aliased, the edge blur makes the whole image look blurry (hence the title of this post). There is some anti-aliasing effect taking place; for instance, look at the legs of the spider on either side of the image. The whole image might look less blurry if every surface were textured. I guess that I should check the filter with the Sponza scene before I discount edge-blurring entirely.
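
For reference, a weighted box blur restricted to edge pixels could look roughly like the following. This is a CPU sketch under assumptions, not the shader used here: `edgeBlur`, the 3x3 footprint, the single-channel image, and the `centreWeight` parameter are all made up for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// 3x3 weighted box blur applied only where edgeMask is non-zero; all other
// pixels pass through unchanged. A centreWeight above 1 biases the average
// towards the original pixel, which softens the blur.
std::vector<float> edgeBlur(const std::vector<float>& img,
                            const std::vector<unsigned char>& edgeMask,
                            int width, int height, float centreWeight) {
    assert(img.size() == (std::size_t)width * height);
    assert(edgeMask.size() == img.size());
    std::vector<float> out(img);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (!edgeMask[y * width + x]) continue;   // not an edge: keep as-is
            float sum = 0.0f, weightSum = 0.0f;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    int sx = x + dx, sy = y + dy;
                    if (sx < 0 || sx >= width || sy < 0 || sy >= height) continue;
                    float w = (dx == 0 && dy == 0) ? centreWeight : 1.0f;
                    sum += w * img[sy * width + sx];
                    weightSum += w;
                }
            }
            out[y * width + x] = sum / weightSum;
        }
    }
    return out;
}
```

With a sketch like this, thick edge lines in the mask translate directly into a wider blurred band, which is consistent with the overall softness described above.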

Friday, April 15, 2011

Preliminary Results

After working nearly non-stop for the past couple of days, I finally finished a first-pass attempt at performing the point light calculations in CUDA. This took a lot longer than it probably should have due to lingering bit-packing issues, unfamiliarity with CUDA/OpenGL interop, and the fact that I didn't realize at first that you can't write to a texture in a CUDA kernel. The good news is that the output is nearly identical to both the forward and regular deferred rendering paths. The bad news is that the CUDA kernel is slow. For instance, using the default scene with 1024 point lights, I get the following results (quantities are FPS):

| CUDA | Deferred | Forward |
|---|---|---|
| 17.80 | 57.09 | 9.82 |

That is pretty discouraging. I still need to implement post-processing effects, so I'm going to hold off on trying to tune the kernel for now. The code is posted below in case anyone has suggestions.

    __global__ void cudaCalculation41(unsigned int numPointLights, float *plFloats, unsigned int *srBounds,
                                      float *invPersp, float *view, int width, int height, uchar4 *outData) {
        __shared__ float iP[16];
        __shared__ float v[16];
        __shared__ bool tileInRect[MAX_BLOCK_SIZE];
        __shared__ float pLightInfo[8];

        int tdx = threadIdx.x + blockDim.x*threadIdx.y;
        int blockS = blockDim.x * blockDim.y;

        // Load the inverse perspective and view matrices
        if(blockS < 32) {
            if(tdx < 16) {
                iP[tdx] = invPersp[tdx];
                v[tdx] = view[tdx];
            }
        }
        else {
            if(tdx < 16) {
                iP[tdx] = invPersp[tdx];
            }
            else if(tdx < 32) {
                v[tdx - 16] = view[tdx - 16];
            }
        }
        __syncthreads();

        // Calculate texture read coordinates
        ushort2 coords;
        coords.x = blockDim.x * blockIdx.x + threadIdx.x;
        coords.y = blockDim.y * blockIdx.y + threadIdx.y;

        // Get the depth and VS position
        uchar4 txRead = tex2D(depthTexRef, coords.x, coords.y);
        unsigned int depthUInt = txRead.x + txRead.y*256 + txRead.z*256*256 + txRead.w*256*256*256;
        float depth = __int_as_float(depthUInt);
        float3 vpos = vsPosition(depth, (float)coords.x/width, (float)coords.y/height, iP);

        // Get the normal
        txRead = tex2D(normalTexRef, coords.x, coords.y);
        float n1 = txRead.x/(256.0f*255.0f) + txRead.y/255.0f;
        float n2 = txRead.z/(256.0f*255.0f) + txRead.w/255.0f;
        n1 = n1*4.0f - 2.0f;
        n2 = n2*4.0f - 2.0f;
        float f = n1*n1 + n2*n2;
        float g = sqrt(1.0f - .25f*f);
        float3 normal = make_float3(n1*g, n2*g, 1.0f - .5f*f);

        // Get the tile rect-bounds
        ushort4 pBounds;
        pBounds.x = blockIdx.x * blockDim.x;
        pBounds.y = blockIdx.y * blockDim.y;
        pBounds.z = pBounds.x + blockDim.x - 1;
        pBounds.w = pBounds.y + blockDim.y - 1;

        // Initialize color accumulation buffer
        float3 color_accum = make_float3(0,0,0);
        bool onScreen = (coords.x < width) && (coords.y < height);
        uchar4 diffuse;
        if(onScreen) {
            diffuse = tex2D(diffuseTexRef, coords.x, coords.y);
        }

        unsigned int numLoops = numPointLights / blockS + (numPointLights % blockS == 0 ? 0 : 1);

        // Loop through for all of the lights
        for(unsigned int i = 0; i < numLoops; ++i) {
            int index = i*blockS + tdx;

            // Check to see if a light is in bounds
            if(index < numPointLights) {
                tileInRect[tdx] = !((srBounds[index] > pBounds.z) ||
                                    (srBounds[index + numPointLights] > pBounds.w) ||
                                    (srBounds[index + 2*numPointLights] < pBounds.x) ||
                                    (srBounds[index + 3*numPointLights] < pBounds.y));
            }
            else
                tileInRect[tdx] = false;
            __syncthreads();

            // For each light that was in bounds, calculate the light contribution
            for(unsigned int j = 0; j < blockS; ++j) {
                if(tileInRect[j]) {
                    // Load the info for a single light into shared memory
                    if(tdx < 8) {
                        pLightInfo[tdx] = plFloats[(i*blockS+j)*8 + tdx];
                    }
                    __syncthreads();

                    // Only perform light calculation if the thread corresponds to an on-screen coordinate
                    if(onScreen) {
                        float3 lpos = mMult3(v, make_float4(pLightInfo[0], pLightInfo[1], pLightInfo[2], 1.0f));
                        float d = dist(lpos, vpos);
                        float attenuate = 1.0f;
                        if(d > pLightInfo[6])
                            attenuate = .0f;
                        else if(d > pLightInfo[7])
                            attenuate -= (d - pLightInfo[7])/(pLightInfo[6] - pLightInfo[7]);

                        // N*L*attenuation
                        float3 lDir = normalize(make_float3(lpos.x - vpos.x, lpos.y - vpos.y, lpos.z - vpos.z));
                        float factor = dMax(dot(lDir,normal), 0.0f)*attenuate;

                        // Accumulate the color contribution from the current light
                        color_accum.x += factor*pLightInfo[3];
                        color_accum.y += factor*pLightInfo[4];
                        color_accum.z += factor*pLightInfo[5];
                    }
                    __syncthreads();
                }
            }
        }

        // Multiply light accumulation by the texture color
        if(onScreen)
            outData[coords.x + width*coords.y] = make_uchar4(pointFactor*color_accum.x*diffuse.x,
                                                             pointFactor*color_accum.y*diffuse.y,
                                                             pointFactor*color_accum.z*diffuse.z, 255);
    }
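
For readers who want to check the kernel's per-light logic on the CPU, the three small pieces it relies on can be pulled out as plain host functions. This is a sketch based on one reading of the kernel above: the names `overlaps`, `attenuation`, and `ceilDiv` are made up, and the interpretation of `srBounds` as min-x, min-y, max-x, max-y arrays and of `pLightInfo[6]` and `pLightInfo[7]` as outer and inner radii is an assumption.

```cpp
#include <cassert>

struct Rect { unsigned x0, y0, x1, y1; };   // inclusive pixel bounds

// Tile-versus-light rectangle test, mirroring the tileInRect expression:
// the rectangles overlap unless one lies entirely to one side of the other.
bool overlaps(const Rect& light, const Rect& tile) {
    return !(light.x0 > tile.x1 || light.y0 > tile.y1 ||
             light.x1 < tile.x0 || light.y1 < tile.y0);
}

// Linear falloff: full intensity inside `inner`, zero beyond `outer`,
// and a straight ramp in between.
float attenuation(float d, float outer, float inner) {
    if (d > outer) return 0.0f;
    if (d > inner) return 1.0f - (d - inner) / (outer - inner);
    return 1.0f;
}

// Number of passes needed for a block of `blockSize` threads to test
// `numLights` lights; equivalent to the numLoops expression in the kernel.
unsigned ceilDiv(unsigned numLights, unsigned blockSize) {
    assert(blockSize != 0);
    return (numLights + blockSize - 1) / blockSize;
}
```

With 1024 lights and a 16x16 block, `ceilDiv(1024, 256)` gives 4 outer iterations, each of which scans up to 256 candidate lights per tile.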

Bit-Packing: Depth and Normals

When I discovered that CUDA wouldn't let me register a texture of the GL_DEPTH_COMPONENT type, I decided that I needed to write the depth values into a texture in my g-buffer shader. Also, I had been neglecting the task of actually packing the 16-bit normal components into two different channels of an 8-bit RGBA texture. After some searching, I came across the following code for "packing" 16- or 32-bit information into 8-bit channels.

    // Packing and unpacking code courtesy of:
    // http://www.ozone3d.net/blogs/lab/20080604/glsl-float-to-rgba8-encoder/
    // http://olivers.posterous.com/linear-depth-in-glsl-for-real
    vec4 packFloatTo4x8(in float val) {
        const vec4 bitSh = vec4(256.0f*256.0f*256.0f, 256.0f*256.0f, 256.0f, 1.0f);
        const vec4 bitMsk = vec4(0.0f, 1.0f/256.0f, 1.0f/256.0f, 1.0f/256.0f);
        vec4 result = fract(val * bitSh);
        result -= result.xxyz * bitMsk;
        return result;
    }

    vec4 pack2FloatTo4x8(in vec2 val) {
        const vec2 bitSh = vec2(256.0f, 1.0f);
        const vec2 bitMsk = vec2(0.0f, 1.0f/256.0f);
        vec2 res1 = fract(val.x * bitSh);
        res1 -= res1.xx * bitMsk;
        vec2 res2 = fract(val.y * bitSh);
        res2 -= res2.xx * bitMsk;
        return vec4(res1.x,res1.y,res2.x,res2.y);
    }

    float unpack4x8ToFloat(in vec4 val) {
        const vec4 unshift = vec4(1.0f/(256.0f*256.0f*256.0f), 1.0f/(256.0f*256.0f), 1.0f/256.0f, 1.0f);
        return dot(val, unshift);
    }

    vec2 unpack4x8To2Float(in vec4 val) {
        const vec2 unshift = vec2(1.0f/256.0f, 1.0f);
        return vec2(dot(val.xy, unshift), dot(val.zw, unshift));
    }

The problem with this is that it doesn't actually preserve the identical bit information from the 32-bit float. Some amount of precision is lost, which leads to an obvious difference between forward and deferred rendering as shown below. The picture on the left is forward, and the picture on the right is deferred.

If you look at the white spot of light in the center of each region, you can see that the one on the left is less sharp, indicating that the associated light source might have been further away.
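
One way to see where precision can go missing is to run the fract-based scheme on the CPU and push the packed channels through a model of an 8-bit render target. The sketch below is only that: `packFract`, `unpackFract`, and `storeRgba8` are hypothetical names, and the storage model (write as `round(f*255)`, read back as `c/255`) is an assumption about the hardware conversion rather than something measured in this project.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec4 = std::array<float, 4>;

float fractf(float x) { return x - std::floor(x); }

// CPU transcription of the fract-based packFloatTo4x8, for val in [0, 1).
Vec4 packFract(float val) {
    assert(val >= 0.0f && val < 1.0f);
    Vec4 r = { fractf(val * 16777216.0f), fractf(val * 65536.0f),
               fractf(val * 256.0f),      fractf(val) };
    // result -= result.xxyz * vec4(0, 1/256, 1/256, 1/256)
    return { r[0], r[1] - r[0] / 256.0f, r[2] - r[1] / 256.0f, r[3] - r[2] / 256.0f };
}

// CPU transcription of unpack4x8ToFloat.
float unpackFract(const Vec4& v) {
    return v[0] / 16777216.0f + v[1] / 65536.0f + v[2] / 256.0f + v[3];
}

// Assumed model of an RGBA8 target: each channel is rounded to a multiple
// of 1/255 on write and returned as that multiple on read.
Vec4 storeRgba8(const Vec4& v) {
    Vec4 out;
    for (int i = 0; i < 4; ++i) {
        float c = std::fmin(std::fmax(v[i], 0.0f), 1.0f);
        out[i] = (float)std::lround(c * 255.0f) / 255.0f;
    }
    return out;
}

// Absolute error after pack -> 8-bit storage -> unpack.
float roundTripError(float val) {
    return std::fabs(unpackFract(storeRgba8(packFract(val))) - val);
}
```

Without the storage step the pack and unpack functions cancel almost exactly. Under this model the loss comes from the mismatch between the 1/256 steps the encoding assumes and the 1/255 steps the texture actually stores; for a depth of 0.5 the round trip is off by roughly 0.002.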

GLSL 4.0 (and earlier versions with the proper extensions defined) supports direct manipulation of the bits and convenient functions for packing/unpacking floats into integer formats. This allows you to preserve perfect bit information. Unfortunately, I couldn't figure out how to read/output texture data as anything but normalized floating point numbers, even when I requested an internal type of unsigned int. I'm assuming that it is possible to do otherwise; I just couldn't figure out how. Anyway, I was able to develop packing/unpacking methods that seem to do a perfect bit pack/unpack, but I'm a little wary due to the (forced) conversion to normalized floats. The code I ended up using is:

    vec4 packFloatTo4x8(in float val) {
        uint a = floatBitsToInt(val);
        return vec4((bitfieldExtract(a,0,8))/256.0f,
                    (bitfieldExtract(a,8,8))/256.0f,
                    (bitfieldExtract(a,16,8))/256.0f,
                    (bitfieldExtract(a,24,8))/256.0f);
    }

    float unpack4x8ToFloat(in vec4 val) {
        uint a = uint(val.x*256.0f+.5f) +
                 uint(val.y*256.0f+.5f)*256u +
                 uint(val.z*256.0f+.5f)*256u*256u+
                 uint(val.w*256.0f+.5f)*256u*256u*256u;
        return uintBitsToFloat(a);
    }

    vec4 pack2FloatTo4x8(in vec2 val) {
        uint a = packUnorm2x16(val);
        return vec4((bitfieldExtract(a,0,8))/256.0f,
                    (bitfieldExtract(a,8,8))/256.0f,
                    (bitfieldExtract(a,16,8))/256.0f,
                    (bitfieldExtract(a,24,8))/256.0f);
    }

    vec2 unpack4x8To2Float(in vec4 val) {
        uint a = uint(val.x*256.0f+.5f) +
                 uint(val.y*256.0f+.5f)*256u +
                 uint(val.z*256.0f+.5f)*256u*256u+
                 uint(val.w*256.0f+.5f)*256u*256u*256u;
        return unpackUnorm2x16(a);
    }

The only problem with this is that it is a bit slower than the less accurate methods posted above. Initially, I thought that they were much slower, but apparently I changed the lighting calculations somewhere between my 3.3 version shaders and my 4.1 version shaders. If anyone comes across this blog and has a more efficient way of packing/unpacking 32- and 16-bit floats into 8-bit RGBA channels, please leave something in the comments.
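
The wariness about the forced conversion to normalized floats can be checked numerically. The following sketch pushes every byte value through an assumed model of an 8-bit normalized channel (write as `round(f*255)`, read back as `c/255`) and decodes it the way the shader does. The function names are made up, and real hardware may round differently, so treat the result as a property of the model rather than of any particular GPU.

```cpp
#include <cassert>
#include <cmath>

// Assumed model of writing a float in [0,1] to an 8-bit normalized channel
// and sampling it back.
float storeAndSampleUnorm8(float f) {
    long q = std::lround(f * 255.0f);
    if (q < 0) q = 0;
    if (q > 255) q = 255;
    return (float)q / 255.0f;
}

// Byte round trip in the style of the shader code: encode as b/divisor,
// decode as uint(v*divisor + 0.5).
int roundTripByte(int b, float divisor) {
    assert(b >= 0 && b <= 255);
    float sampled = storeAndSampleUnorm8((float)b / divisor);
    return (int)(sampled * divisor + 0.5f);
}

// Number of byte values in [0,255] that do not survive the round trip.
int countMismatches(float divisor) {
    int bad = 0;
    for (int b = 0; b < 256; ++b)
        if (roundTripByte(b, divisor) != b) ++bad;
    return bad;
}
```

Under this model, a divisor of 256 (as in the code above) recovers every byte except 128, which decodes as 129 because 128/256 lands exactly halfway between two stored levels. A divisor of 255 in both the pack and unpack functions recovers all 256 values in the model. That is a different scaling from the one posted here and has not been tested in this renderer.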

Tuesday, April 12, 2011

16-bit color accumulation target and scissor rects

Rendering point lights onto flat surfaces with no texturing leads to annoying quantization rings that are very visible when using only 8 bits per color channel. This problem is even worse when two point lights cover the same area, which produces a diamond-shaped pattern, as in the included screenshot:

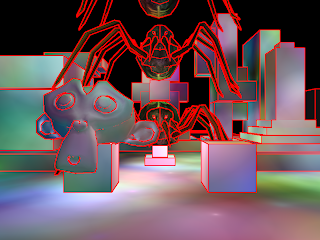

In order to help combat this, I changed the forward and basic deferred rendering path to perform the color accumulation from lighting into a 16-bit per channel texture. While this doesn't get rid of the ring effect from a single point light, it does fix the diamond pattern when two point lights intersect. Also, rendering into a texture is better anyway because it is what I will need to do when I start working on post processing effects; plus, if I want to actually perform some sort of HDR scaling to the accumulated colors, this will make it easy to do so.
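
A small numerical sketch shows why accumulating at 16 bits can help where lights overlap. It assumes each light is blended additively into the target in its own pass and that the target rounds to the nearest representable value on every write; the function names and that rounding model are assumptions for illustration, not measurements from this renderer.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Additively blend each contribution (in [0,1]) into a target with `bits`
// bits per channel, rounding to the nearest stored value after every pass.
long accumulate(const std::vector<double>& contributions, int bits) {
    assert(bits > 0 && bits <= 16);
    const double maxVal = std::ldexp(1.0, bits) - 1.0;   // 255 or 65535
    long dest = 0;
    for (double c : contributions) {
        double blended = dest / maxVal + c;
        if (blended > 1.0) blended = 1.0;                // saturate
        dest = std::lround(blended * maxVal);
    }
    return dest;
}

// Convert a stored value with `bits` bits to the 8-bit value finally shown.
long toDisplay8(long stored, int bits) {
    const double maxVal = std::ldexp(1.0, bits) - 1.0;
    return std::lround(stored / maxVal * 255.0);
}
```

Take two lights that each contribute 0.4 of one 8-bit step at some pixel. In an 8-bit target both writes round down to 0, so the pixel displays 0. In a 16-bit target the sum survives and the pixel displays 1. Where two falloffs overlap, these independently rounded contributions would produce extra level boundaries, which is one plausible source of the diamond pattern. A single light still ends up on the 8-bit display steps either way, which fits the rings not going away.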

I also implemented a seemingly correct scissoring rectangle calculation for point lights. It speeds up the deferred shading render by a significant amount, depending on the scene and the size of the point lights. On the simple scene (spiders + monkey head + cuboids), the deferred shader is twice as fast as the forward renderer with moderately sized point lights. As the point lights get smaller, this gap in performance increases, with the forward renderer staying constant and the deferred renderer getting faster. I've attached a picture giving a rough view of the scissoring rectangles:
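
For readers unfamiliar with the idea, here is one conservative way to get a scissor rectangle for a point light. It is not the calculation used in this project. It bounds the light's sphere by a view-space box, projects the box corners, and falls back to the full screen when the sphere reaches the near plane. The function name, the `fx` and `fy` parameters (the x and y scale terms of a symmetric perspective projection), and the camera-looks-down-negative-z convention are all assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Rect { int x0, y0, x1, y1; };   // inclusive; empty if x1 < x0 or y1 < y0

// Conservative pixel rectangle for a sphere at view-space (cx, cy, cz).
Rect scissorRect(float cx, float cy, float cz, float radius,
                 float fx, float fy, float nearZ, int width, int height) {
    assert(width > 0 && height > 0 && radius >= 0.0f);
    const Rect full = { 0, 0, width - 1, height - 1 };
    // If the sphere touches or crosses the near plane, the projection is
    // not well behaved, so give up and cover the whole screen.
    if (cz + radius >= -nearZ) return full;

    float minX = 1e30f, maxX = -1e30f, minY = 1e30f, maxY = -1e30f;
    for (int i = 0; i < 2; ++i) {                 // far and near faces of the box
        float invDepth = 1.0f / -(cz + (i ? radius : -radius));
        for (int s = -1; s <= 1; s += 2) {        // low and high side on each axis
            float nx = fx * (cx + s * radius) * invDepth;
            float ny = fy * (cy + s * radius) * invDepth;
            minX = std::min(minX, nx); maxX = std::max(maxX, nx);
            minY = std::min(minY, ny); maxY = std::max(maxY, ny);
        }
    }
    // Clamp to the visible NDC range before converting to pixels.
    auto clampNdc = [](float v) { return std::max(-1.0f, std::min(1.0f, v)); };
    auto toPixel = [](float ndc, int size) { return (ndc * 0.5f + 0.5f) * size; };
    Rect r;
    r.x0 = (int)std::floor(toPixel(clampNdc(minX), width));
    r.y0 = (int)std::floor(toPixel(clampNdc(minY), height));
    r.x1 = (int)std::ceil(toPixel(clampNdc(maxX), width)) - 1;
    r.y1 = (int)std::ceil(toPixel(clampNdc(maxY), height)) - 1;
    return r;
}
```

For a unit-radius light 10 units in front of the camera with `fx = fy = 1` on a 100x100 target, this gives the rectangle (44, 44) to (55, 55). The box is looser than the sphere's true projection, so a tighter calculation would shade fewer pixels. The y axis follows the OpenGL bottom-up convention.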

The next step will be to write the tile-based light calculation using CUDA.
